Nuitrack: 0.35.7

Unity: 2020.3.13f1

UE4: 4.26

I am using Nuitrack AI with the Unity SDK. I have also done the base setup in Unreal Engine 4 and am seeing similar quality issues there. I am simply trying to match the user to the rig, but I am having significant tracking issues that aren't present in the Unity Avatar Animation Tutorial.

We tried upgrading to Nuitrack AI from the trial to see if the quality would improve, without much effect. I set up Nuitrack AI using the instructions in this forum reply by a member of the Nuitrack team, as I could not find any other information on setup in the documentation. No other changes have been made to the Nuitrack config.

The issues are most visible in the Unity tutorial AvatarAnimation, in the RiggedModel2 scene of the Unity package provided with the SDK. They show up even in an unedited copy of that scene from the Unity package on GitHub.

A comparison of quality can be seen between the tutorial and my attempted recreation.

Nuitrack tutorial GIF example

Here is what I get locally when attempting the same movements in the same scene:

All of this comes down to one question: what do I need to do to improve tracking quality?

Which leads me to these sub-questions:

- Is it worth reinstalling?

- Is there a best practices document for height/angle of camera and what’s in the background to improve reading? We will likely need to work from a low/steep angle for our use case.

- Are there more steps to activating Nuitrack AI than that post implies? If so, where do I find that information?

- Are there certain settings on the Nuitrack Scripts prefab that I need to adjust? I can't find any documentation covering each of its variables.

Hello @ADecker_Roto

Which sensor do you use?

Also, please send a screenshot showing the depth view from Program Files\Nuitrack\nuitrack\nuitrack\bin\nuitrack_sample.exe.

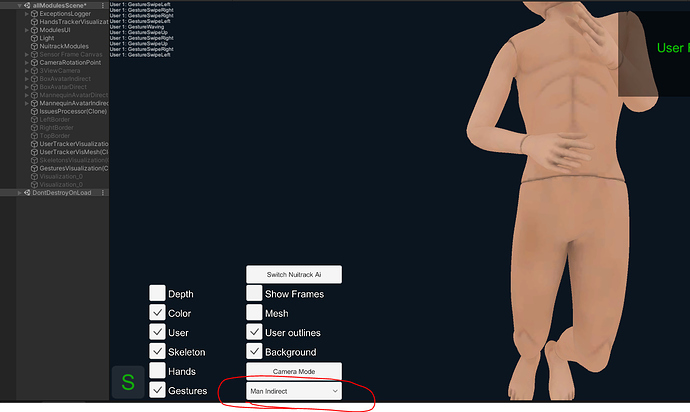

Direct mapping is very sensitive to joint stability. Indirect mapping is probably better for you. You can test the different types of mapping in the AllModulesScene scene.

Is there a best practices document for height/angle of camera and what’s in the background to improve reading? We will likely need to work from a low/steep angle for our use case.

Documentation

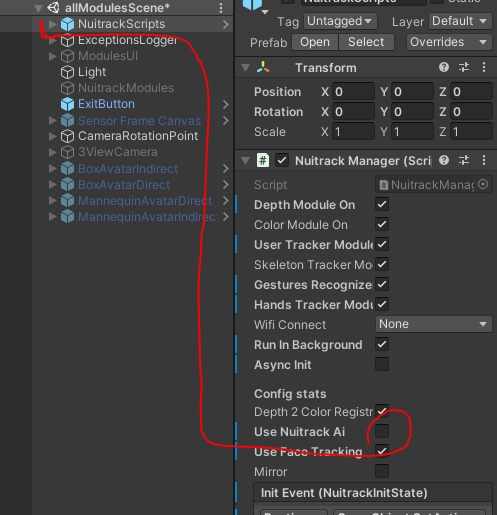

Are there more steps to activating nuitrack AI than that post implies? Where do I find that information if so?

No, it works.

Alternatively, you can just enable AI in this component without editing nuitrack.config. (However, if the algorithm is already enabled in nuitrack.config, that value takes priority.)

Hello @Stepan.Reuk,

Thanks for the fast reply.

Which sensor do you use?

We are using an Azure Kinect DK Model 1880.

And please send screenshot with depth from Program Files\Nuitrack\nuitrack\nuitrack\bin\nuitrack_sample.exe

Here is a link to a GIF of me running nuitrack_sample.exe. I still see some jitter there as well.

Direct mapping is very sensitive to joint stability. Indirect mapping is probably better for you. You can test the different types of mapping in the AllModulesScene scene.

Testing the AllModulesScene gave me noticeably better results than the tutorials also provided in the SDK. I wasn't expecting the scripts to differ between the demos at all, much less to see such a quality difference between the AllModulesScene approach to indirect mapping and the Tutorials/AvatarAnimation/ScenesRiggedModel approach to animating a skeleton. I might end up editing it to lerp joints toward their tracked positions rather than writing them immediately, to remove more of the jitter.
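Lerping toward the tracked position every frame amounts to exponential smoothing: the rendered joint covers a fraction of the remaining distance each frame instead of snapping. Below is a minimal Python sketch, assuming joint positions arrive as (x, y, z) tuples keyed by a joint name; the `JointSmoother` class, its `time_constant` parameter, and the joint name are hypothetical and not part of the Nuitrack SDK. Deriving the fraction from the frame time keeps the smoothing consistent when the frame rate varies. In Unity, the per-joint step corresponds to `Vector3.Lerp` (and `Quaternion.Slerp` for rotations).

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Linear interpolation: t = 0 returns a, t = 1 returns b."""
    return (a[0] + (b[0] - a[0]) * t,
            a[1] + (b[1] - a[1]) * t,
            a[2] + (b[2] - a[2]) * t)


class JointSmoother:
    """Hypothetical helper that exponentially smooths tracked joint positions.

    time_constant (seconds) sets the trade-off: larger values remove more
    jitter but make the avatar lag further behind fast movements.
    """

    def __init__(self, time_constant: float = 0.08) -> None:
        # 0.08 s is an arbitrary starting value to tune, not a recommended setting.
        self.time_constant = time_constant
        self._state: Dict[str, Vec3] = {}

    def update(self, joint: str, tracked: Vec3, dt: float) -> Vec3:
        previous = self._state.get(joint)
        if previous is None or self.time_constant <= 0.0:
            # First sample for this joint (or smoothing disabled): snap to it.
            smoothed = tracked
        else:
            # Fraction of the remaining distance to cover this frame; it
            # depends on dt so the smoothing is consistent across frame rates.
            t = 1.0 - math.exp(-dt / self.time_constant)
            smoothed = lerp(previous, tracked, t)
        self._state[joint] = smoothed
        return smoothed

    def reset(self, joint: str) -> None:
        """Forget a joint, e.g. when tracking of the user is lost."""
        self._state.pop(joint, None)


# Called once per frame with the latest tracked position ("LeftWrist" is illustrative).
smoother = JointSmoother(time_constant=0.08)
pos = smoother.update("LeftWrist", (0.31, 1.02, 1.85), dt=1 / 30)
```

Smoothing hides jitter rather than removing its cause, and every bit of it adds latency, so it works best on top of a stable setup (good sensor placement, indirect mapping) rather than as a substitute for one.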

No, it works. Or you can enable AI in this component.

So there is an additional step beyond what the forum post describes. It might be worth adding to the Unity/Unreal setup docs. Speaking of which…

What is the process for setting up indirect mapping of data onto a skeletal mesh in Unreal? I noticed that the Unreal documentation is not as extensive as the Unity documentation. Is there an easy way to get Unreal to read a skeleton and get the quality improvements we discussed?