When we read out the user position, it varies a lot even when the user is standing as still as possible.

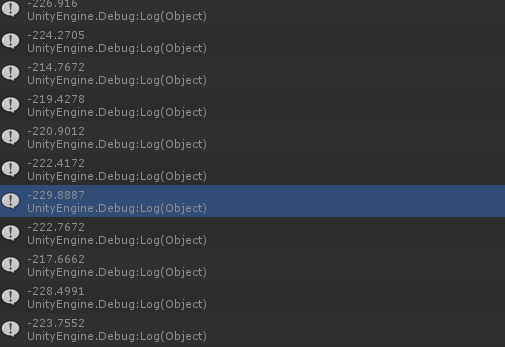

We’re trying to move an object with the user, and when the user is standing still, we receive positions that differ by up to 10 pixels from the previous sample. Example:

That data comes from debugging the user position in an update while the user is standing as still as possible. The object moving with this user shakes quite a lot.

EDIT: This also happens for the proj data. I multiply the proj data by the screen size and get the same inconsistent data.

Hi Patrick,

Please provide us with the script (or at least the relevant part of it) so we can investigate this issue.

It’s nothing really, it’s the exact same data as I get directly from Nuitrack.

    private void SetUserStatus(UserFrame frame)
    {
        List<UserData> userData = new List<UserData>();

        // Copy the raw real-world and projective positions of every tracked user.
        foreach (User user in frame.Users)
        {
            userData.Add(new UserData(user.ID, user.Real.ToVector3(), user.Proj.ToVector3()));
        }
    }

And then I just debug both user.Real.ToVector3() and user.Proj.ToVector3(). The data then gets passed along through a few scripts and used in a game, but the data I get right out of Nuitrack is already wrong. This is the update event from Nuitrack, by the way: _userTracker.OnUpdateEvent += SetUserStatus;

Actually, this is normal behavior. You receive the coordinates of the segment center. The “center” of the segment image of a user standing in a standard pose is somewhere around the stomach; if the user raises their arms, the “center” moves up as well and ends up somewhere around the face. So the center of a segment and the center of a user are different things.

We recommend using the coordinates of skeleton joints instead (see our “RGB and Skeletons” tutorial project for 2D or “First Project” for 3D).

Well, we have the same with hand data, so this means we are forced to use skeletons even though we only need the hand/user tracker. Which is unnecessary performance loss imo… Anything we can do to work around this? Smoothing it up a bit?

Also, how do I go from proj coordinates (the 0 to 1 range) to coordinates I can use in the scene? I tried multiplying the proj coordinates by Screen.width and Screen.height, but this only works for one side of the screen.

Yes, smoothing should help. Nuitrack skeleton is smoothed automatically, no additional smoothing is needed.

As for the second question, this option should work fine:

new Vector2(projPos.x * Screen.width, projPos.y * Screen.height);

Any idea how to smooth that data?

Also, the 0 to 1 conversion works now; my scene was set up wrong. My bad!

Usually, smoothing is performed using Unity’s Lerp function.

If you need to smooth the vectors, you can do it the following way:

transform.localPosition = Vector3.Lerp(transform.localPosition, newPosition, Time.deltaTime * 1f);
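To illustrate the trade-off behind that last argument: using `Time.deltaTime * k` as the interpolation factor makes the amount of smoothing depend on the frame rate. A common variant, shown below as a minimal sketch, derives the factor from an exponential decay instead. The class name `Smoothing`, the method `ExpSmooth`, and the `rate` parameter are hypothetical and not part of Unity or Nuitrack; the code works on a single float so it can be tried outside Unity.

```csharp
using System;

// Hypothetical helper for illustration; not part of Unity or Nuitrack.
public static class Smoothing
{
    // Moves 'current' toward 'target' by a fraction that depends on the elapsed time.
    // For a fixed target, the result after a given total time is the same whether
    // it was reached in 30 large steps or 120 small ones.
    // 'rate' is in 1/seconds: higher means faster response and less smoothing.
    public static float ExpSmooth(float current, float target, float rate, float deltaTime)
    {
        float t = 1f - (float)Math.Exp(-rate * deltaTime);
        return current + (target - current) * t;
    }
}
```

In a Unity script the same factor can be passed straight to Lerp, for example `Vector3.Lerp(transform.localPosition, newPosition, 1f - Mathf.Exp(-rate * Time.deltaTime))`. A single `rate` still trades jitter against lag: a low value hides the shaking but feels slow, a high value feels responsive but lets the shaking through.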

Let us know if you have any questions regarding Nuitrack operation.

I actually do. I just got the chance to try the Lerp function and it doesn’t help much. We either lerp it so hard that it becomes very slow and doesn’t feel responsive anymore, or it keeps the shakiness, just smoother. I also tried only using the new user position if it differs enough from the previous one, like this:

if (Vector3.Distance(_lastpos, user.Proj.ToVector3()) > 4f)

That works for the shaking, but it makes the data jump if you move more slowly. I tried a lot more but I’m running out of ideas. Is there any solution we could try? We would like to filter the data BEFORE the movement is made. We pass Nuitrack’s data into our own data class (this happens inside the OnUserUpdate), so we would like to filter it before passing it to our own data class. Right now we simply pass user.Proj.ToVector3() to our own Vector3. How is this done for the skeleton, which is smoothed automatically? I can only imagine you are using something similar there. I must be missing something. It’s very important for us that everything moves smoothly yet feels responsive, so no delays. It’s a mini game in which the user needs to hit objects with their body.

EDIT: Anyone?
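One approach that is often used for exactly this "smooth when still, responsive when moving" requirement is a speed-adaptive low-pass filter in the style of the 1€ filter (Casiez et al.): it smooths heavily while the value barely changes and loosens the smoothing as the measured speed goes up. The sketch below is only an illustration of that idea. The thread does not say how Nuitrack smooths its skeleton, so this is not a description of Nuitrack's method, and the class name, parameter names, and default values are assumptions that would need tuning for the units in use (0 to 1 proj values versus pixels).

```csharp
using System;

// Illustrative speed-adaptive low-pass filter for one coordinate.
// Names and defaults are assumptions, not part of Unity or Nuitrack.
public class OneEuroFilter
{
    private readonly float minCutoff;   // Hz; lower = less jitter while standing still
    private readonly float beta;        // higher = less lag during fast movement
    private readonly float derivCutoff; // Hz; smoothing applied to the speed estimate
    private bool hasPrev;
    private float prevValue;
    private float prevDeriv;

    public OneEuroFilter(float minCutoff = 1f, float beta = 0.01f, float derivCutoff = 1f)
    {
        this.minCutoff = minCutoff;
        this.beta = beta;
        this.derivCutoff = derivCutoff;
    }

    // Converts a cutoff frequency into the blend factor of a first-order low-pass.
    private static float Alpha(float cutoff, float dt)
    {
        float tau = 1f / (2f * (float)Math.PI * cutoff);
        return 1f / (1f + tau / dt);
    }

    // Feed one raw sample and the time since the previous sample (in seconds).
    public float Filter(float value, float dt)
    {
        if (!hasPrev)
        {
            // The first sample passes through unchanged.
            hasPrev = true;
            prevValue = value;
            prevDeriv = 0f;
            return value;
        }
        if (dt <= 0f) return prevValue; // no time elapsed, keep the last output

        // Estimate the speed from the last filtered value and smooth that estimate.
        float rawDeriv = (value - prevValue) / dt;
        prevDeriv += Alpha(derivCutoff, dt) * (rawDeriv - prevDeriv);

        // The faster the movement, the higher the cutoff and the lighter the smoothing.
        float cutoff = minCutoff + beta * Math.Abs(prevDeriv);
        prevValue += Alpha(cutoff, dt) * (value - prevValue);
        return prevValue;
    }
}
```

To filter before the data reaches your own class, one option would be to keep one filter instance per axis per user and run each component of `user.Proj.ToVector3()` through it inside the update handler, using the time since the previous frame as `dt`. Lowering `minCutoff` reduces the shaking at rest; raising `beta` reduces the lag during quick movements.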
