I am trying to create a virtual fitting room by following the tutorial and referencing the existing sample provided with the SDK. But whenever other people with different body types use the virtual fitting room, some of the bones are noticeably mismatched, and sometimes the model is slightly off-center. Is there any way to fine-tune the Nuitrack avatar script? Thank you

Some of these pictures were taken at different locations, but they all use the same sensor, an Intel RealSense D435. We want to fine-tune the bones so that the neck and other body parts are more precise. Sorry, I can only embed one image per post since my account is limited.

Hi @Stepan.Reuk, the issue is that it currently only works well for a certain height. I read that it is supposed to track heights of 140-200 cm; is that right? If someone is taller or shorter than the default, the clothes do not “scale” properly.

We also noticed that when someone is wearing a dress, Nuitrack doesn’t recognize the person as well.

It would really help if you could help us solve these two issues.

Thank you

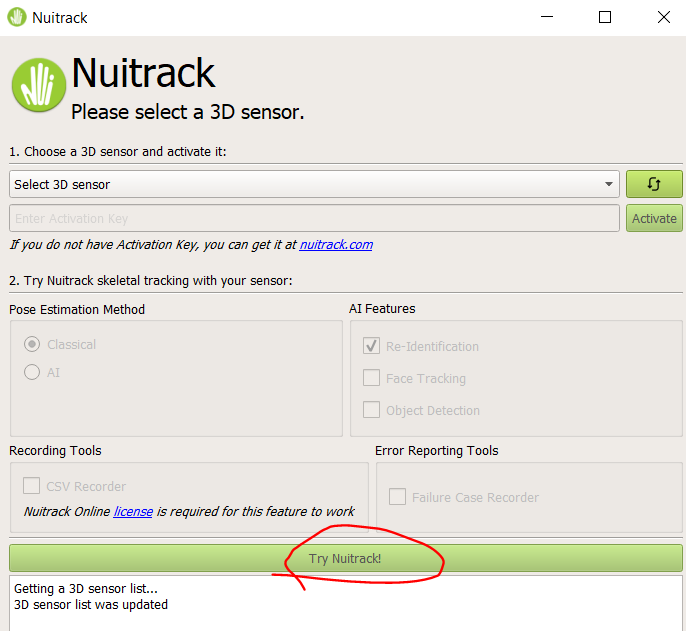

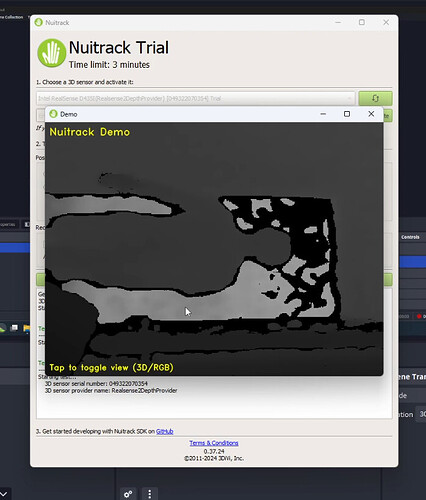

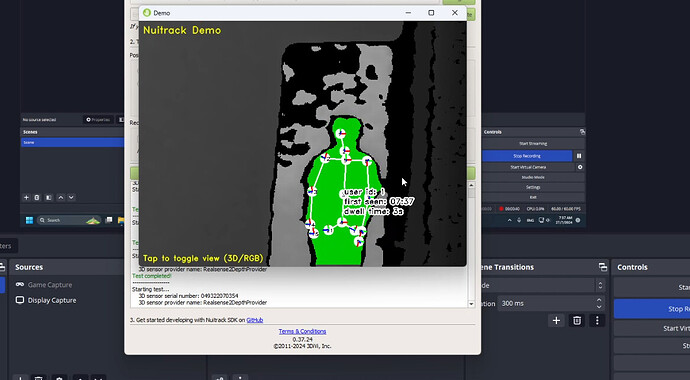

- Check outside of Unity first: run the tracking example, e.g. through the Nuitrack Activation Tool. Is the skeleton applied to the person correctly?

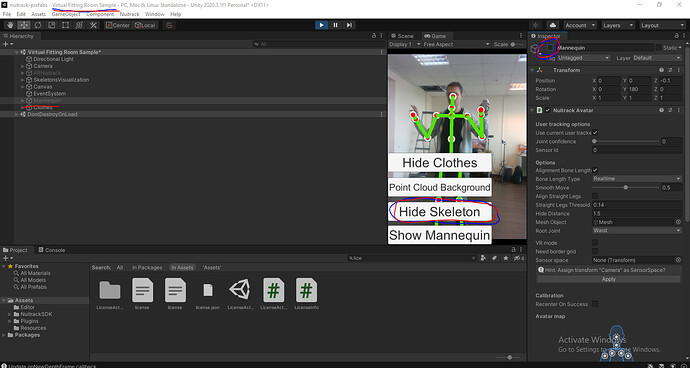

- Run the Virtual Fitting Room Sample in Unity and leave only the skeleton visualization enabled.

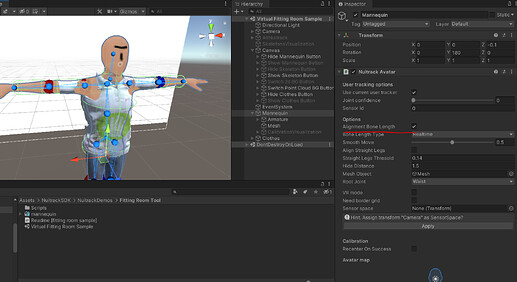

- Turn on the mannequin visualization.

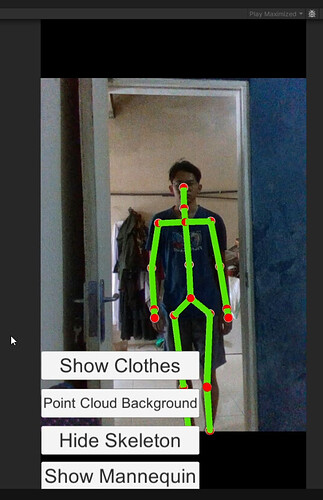

We are using portrait mode for our current project. In the Nuitrack tools, the bones look fine in landscape mode, but the person isn’t detected in portrait mode. With the mannequin sample, the model still doesn’t match the real body very well.

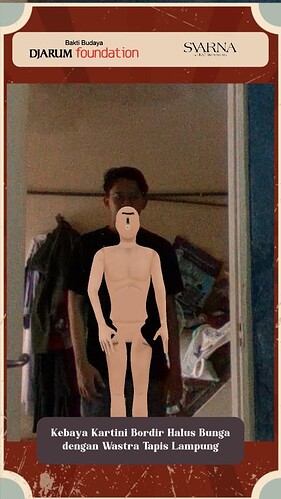

We noticed a few things. We were scaling the RGB image, and that caused the rig not to scale up properly. Also, unlike in the sample, the clothes don’t match properly when the person moves around in front of the camera. A taller person has to move further back, and a shorter person has to move closer. Is there any way to solve this? We want the clothes to fit whoever stands in a given position. We have tried to match all the settings from the sample in our project and can’t figure out why this happens. Is there any way to reduce this offset?
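
One possible cause of an offset that depends on the user’s height and distance is that the RGB image and the overlay are not transformed the same way. For example, the RGB frame might be scaled on screen while the projected joints are not. Below is a minimal sketch of the consistent version. It assumes a pinhole camera model with placeholder intrinsics, not the D435’s real calibration, and the function names are hypothetical. It projects a camera-space joint to pixels, then applies exactly the same scale and offset that were used to draw the RGB frame.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    fx: float  # focal length in pixels, x
    fy: float  # focal length in pixels, y
    cx: float  # principal point x, pixels
    cy: float  # principal point y, pixels


def project(point_m: tuple[float, float, float], k: Intrinsics) -> tuple[float, float]:
    """Pinhole projection of a camera-space point (x right, y down, z forward) to pixels."""
    x, y, z = point_m
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return k.fx * x / z + k.cx, k.fy * y / z + k.cy


def to_display(pixel: tuple[float, float], scale: float, offset_x: float, offset_y: float) -> tuple[float, float]:
    """Apply the SAME scale and offset that were used to draw the RGB frame on screen."""
    u, v = pixel
    return u * scale + offset_x, v * scale + offset_y


if __name__ == "__main__":
    k = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)  # placeholder values
    head = (0.0, -0.5, 2.0)  # 2 m from the camera, 0.5 m above the optical axis
    print(to_display(project(head, k), scale=1.5, offset_x=0.0, offset_y=0.0))  # (480.0, 135.0)
```

Suppose the overlay instead used a slightly different scale than the RGB frame. Each point would then be displaced in proportion to its distance from the scaling pivot. A taller user’s head sits further from that pivot, so it would be off by more, and stepping back pulls it closer. That would be consistent with the behavior described above.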

It looks as if the bones of the clothing model are simply not being resized.

But let’s go step by step. First, check only the skeleton, without clothes: run the Virtual Fitting Room Sample in the editor and do as shown in the screenshot.

(Here the sensor is physically rotated 90 degrees, and Nuitrack is configured to rotate the image by 90 degrees as well. It looks quite accurate.)
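
When the sensor is mounted rotated 90 degrees, the image and every 2D joint coordinate have to be rotated by the same amount, or the overlay ends up offset. Below is a minimal sketch in normalized image coordinates (origin at the top-left, x and y in [0, 1]). The function names are hypothetical, and which direction applies depends on how the sensor is mounted.

```python
def rotate_cw(x: float, y: float) -> tuple[float, float]:
    """Normalized point after rotating the image 90 degrees clockwise."""
    # The old bottom-left corner becomes the new top-left corner.
    return 1.0 - y, x


def rotate_ccw(x: float, y: float) -> tuple[float, float]:
    """Normalized point after rotating the image 90 degrees counter-clockwise (inverse of rotate_cw)."""
    # The old top-right corner becomes the new top-left corner.
    return y, 1.0 - x
```

Apply the same function to every joint before drawing. Mixing the two directions, for example rotating the image clockwise but the joints counter-clockwise, mirrors the overlay about the image center.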

Yes, this looks quite accurate.

But what we want is to fine-tune some parts of the clothes so they look accurate. Take the neck, for example: we just want to offset the neck area of the model slightly. We were thinking we could offset the bone or the rig in Unity to tune it more precisely.
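
One way to keep such a tweak working for users of different heights is to express it relative to a tracked segment instead of as a fixed distance. Below is a minimal sketch, assuming joints are 3D tuples in whatever units the skeleton uses. The name `offset_along_segment` and the fraction values are hypothetical. In Unity, the equivalent adjustment would presumably be applied to the bone after the avatar script has updated it for the frame.

```python
Vec3 = tuple[float, float, float]


def offset_along_segment(joint: Vec3, parent: Vec3, fraction: float) -> Vec3:
    """Move `joint` along the parent->joint direction by `fraction` of the segment length.

    A positive fraction moves the joint away from its parent, a negative one towards it.
    Because the shift scales with the tracked segment, the same fraction gives a
    proportional correction for short and tall users.
    """
    return tuple(j + fraction * (j - p) for j, p in zip(joint, parent))


if __name__ == "__main__":
    torso, neck = (0.0, 1.2, 2.0), (0.0, 1.5, 2.0)
    # Hypothetical tweak: nudge the neck slightly toward the torso.
    print(offset_along_segment(neck, torso, -0.05))
```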

First of all, we needed to make sure that the skeleton works correctly, because bone scaling is based on it.

Everything looks good now. I think the skeleton will fit equally well on a person of any size (within reasonable limits).

You can verify this by enabling the avatar display in this scene. It is essentially the same kind of model you use for clothing, but it also scales the model’s bones according to the distance between the tracked joints.

If this option is enabled, this problem should not occur, provided the model was configured correctly in the 3D editor.
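
Here is a simplified sketch of scaling bones by the distance between joints. For each bone, divide the distance between its two tracked joints by the bone’s length in the model’s rest pose, and use the ratio as that bone’s scale along its length. This is not Nuitrack’s implementation; the joint names, bone table, and rest lengths are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]


def bone_scale_factors(
    tracked: dict[str, Vec3],
    bones: dict[str, tuple[str, str, float]],
) -> dict[str, float]:
    """Return, per bone, tracked length / rest-pose length.

    `bones` maps a bone name to (parent joint, child joint, rest length),
    with the rest length in the same units as the `tracked` positions.
    """
    factors = {}
    for name, (parent, child, rest_length) in bones.items():
        if rest_length <= 0:
            raise ValueError(f"rest length of {name} must be positive")
        factors[name] = math.dist(tracked[parent], tracked[child]) / rest_length
    return factors


if __name__ == "__main__":
    tracked = {"left_shoulder": (0.2, 1.4, 2.0), "left_elbow": (0.2, 1.1, 2.0)}
    bones = {"left_upper_arm": ("left_shoulder", "left_elbow", 0.25)}
    print(bone_scale_factors(tracked, bones))  # upper arm is about 1.2x its rest length
```

Suppose one bone’s factor is far from 1 while the others stay close to it. That may point to a rest-pose or rigging problem in the model rather than a tracking problem.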

Try placing your clothes in this scene, turn on this checkbox (align bone length) on your clothes, run the scene, and leave only the RGB background, the 3D skeleton, and your clothes visible. If everything else looks normal (skeleton, mannequin, background) but your model has some inaccuracies, it is better to correct it in a 3D editor (for example, Blender).
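
To make this comparison less subjective, you can measure the mismatch between the skeleton and the clothing per joint in screen space. The sketch below assumes you have already collected the screen positions of matching skeleton joints and clothing bones; reading them out of Unity is not shown. The function names and the threshold are hypothetical.

```python
import math

Point = tuple[float, float]


def joint_errors(skeleton_px: dict[str, Point], clothing_px: dict[str, Point]) -> dict[str, float]:
    """Pixel distance between each tracked joint and the matching clothing bone (joints present in both)."""
    shared = skeleton_px.keys() & clothing_px.keys()
    return {name: math.dist(skeleton_px[name], clothing_px[name]) for name in shared}


def mean_offset(skeleton_px: dict[str, Point], clothing_px: dict[str, Point]) -> Point:
    """Average (dx, dy) from skeleton to clothing; a large value means the whole overlay is shifted."""
    shared = sorted(skeleton_px.keys() & clothing_px.keys())
    if not shared:
        raise ValueError("no joints in common")
    dx = sum(clothing_px[n][0] - skeleton_px[n][0] for n in shared) / len(shared)
    dy = sum(clothing_px[n][1] - skeleton_px[n][1] for n in shared) / len(shared)
    return dx, dy


def flag_joints(errors: dict[str, float], threshold_px: float) -> list[str]:
    """Names of joints whose error exceeds the threshold, sorted for stable output."""
    return sorted(name for name, err in errors.items() if err > threshold_px)
```

If most joints are off by roughly the same vector, the cause is more likely alignment (RGB scaling, aspect ratio). If the mean offset is small but the same few joints, such as the neck, are flagged for different users, that points to the clothing model itself.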

Thank you so much for the help. Really appreciate it.

What happens if it’s still not scaling properly? I tried align bone length, but it’s not working properly. Does that mean I need to go back and re-rig it in Blender?

@VridayStudio If the mannequin model from our package scales correctly (in the Virtual Fitting Room Sample) and your clothing model is configured correctly in Unity, but there are still problems, then most likely you need to edit it in a 3D editor (Blender).

You can send us a Unity project (the whole project or a minimally reproducible example) and we will try to give recommendations.

Can it be calibrated through the camera position, the pivot point of the RGB frame, or the aspect ratio in the Nuitrack Aspect Ratio Fitter?

The weird thing is that at one point it scales quite properly, and then on another day the rig moves down and to the right a bit. We have no idea what happened. We didn’t change anything in Nuitrack, we were only working on the UI, and we didn’t move the camera either.

We found that by fiddling with those numbers we can roughly get it to fit our bodies better, but I wonder whether what we did is right.
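
Instead of hand-tuning the pivot and aspect values, the mapping from normalized camera coordinates to the screen can be computed directly from the frame and screen sizes. Below is a minimal sketch of two behaviors: fitting the whole frame inside the screen, and enveloping the screen by cropping. These are similar in spirit to the FitInParent and EnvelopeParent modes of Unity’s AspectRatioFitter. Whether Nuitrack’s Aspect Ratio Fitter works exactly this way is an assumption, and the function names are hypothetical.

```python
def fit_rect(
    frame_w: float, frame_h: float, screen_w: float, screen_h: float, envelope: bool = False
) -> tuple[float, float, float, float]:
    """Return (x, y, w, h) of the displayed frame, centered on screen, preserving aspect ratio.

    envelope=False: the whole frame is visible (letterbox / pillarbox).
    envelope=True: the frame covers the whole screen and is cropped at the edges.
    """
    sx = screen_w / frame_w
    sy = screen_h / frame_h
    s = max(sx, sy) if envelope else min(sx, sy)
    w, h = frame_w * s, frame_h * s
    return (screen_w - w) / 2, (screen_h - h) / 2, w, h


def normalized_to_screen(nx: float, ny: float, rect: tuple[float, float, float, float]) -> tuple[float, float]:
    """Map a normalized frame coordinate (0..1, origin top-left) into the displayed rect."""
    x, y, w, h = rect
    return x + nx * w, y + ny * h


if __name__ == "__main__":
    rect = fit_rect(640, 480, 1080, 1920)  # landscape frame shown on a portrait screen
    print(rect)  # (0.0, 555.0, 1080.0, 810.0)
    print(normalized_to_screen(0.5, 0.5, rect))  # (540.0, 960.0)
```

Because the result depends on the screen size, a different Game view resolution or RGB panel size between sessions would shift the overlay. That may be worth checking as one possible reason the rig moved down and to the right.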

Thank you for your help. Here is the project on GitHub: GitHub - Vriday-Studio/project-costume: Virtual fitting room using intel realsense sensor

The main scene is in Assets/Scenes/KebayaKala; you can just press Play in the editor to see how the project works. We are using Intel RealSense D435 and D435i sensors in this project. We have also updated the rigs and models as you suggested, but we still don’t see much improvement, and the tracking behaves inconsistently from session to session.